You should probably just use AWS. Or Azure.

Cloud vendors' marketing departments spend gazillions of dollars to lure teams into 'migrating to the cloud'.

AWS is the default buying choice, much like IBM used to be.

So a contrarian opinion arguing against the cloud naturally makes lots of waves.

But does it make sense to be a cloud contrarian?

The case against the cloud

Basecamp recently announced that it will be leaving the cloud.

Their stated reasons are monetary ("paying over half a million dollars per year for database (RDS) and search (ES) services from Amazon") and also based on values ("[it's] tragic that this decentralized wonder of the world is now largely operating on computers owned by a handful of mega corporations").

Basecamp operates at a scale where owning a rack in a datacenter might yield some financial savings. Their devs can surely handle a few new sysadmin tasks.

My friend Karl Sutt argued against the cloud from a more general perspective. I will steelman his argument before offering my take.

He based his critique on the following observations:

- Cloud is expensive to operate and maintain - not just in terms of the direct spend but also when you include the opportunity cost.

- You are not Netflix, so you don't need to replicate their setup for your non-planetscale app.

- There are too many cloud services to choose from, and we developers can easily overcomplicate the design when a simple tech stack would do.

Cloud is too expensive

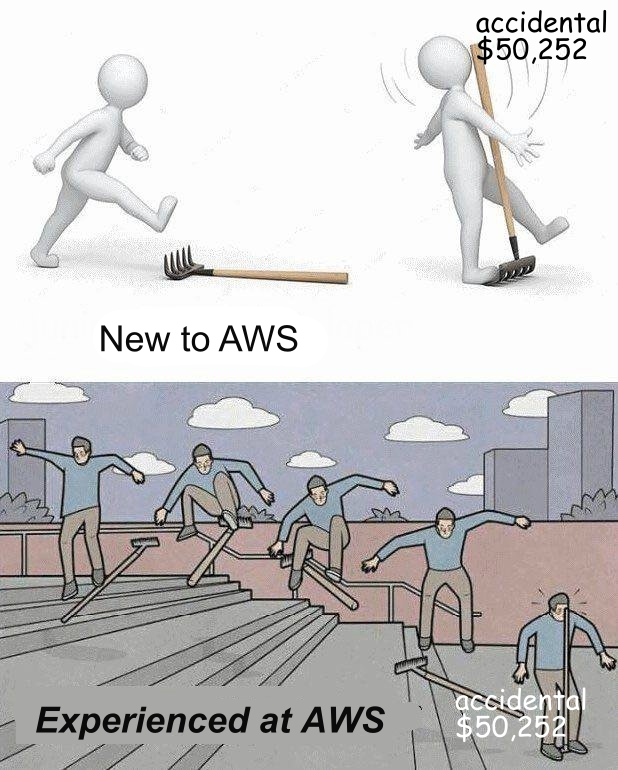

How easy is it to overpay for computers rented from Amazon? Too easy:

Any sufficiently complex enterprise backend will at some point use services that will create very unpopular line items on the monthly invoice.

And your backlog likely contains user stories such as "As a CTO, I want to slash my cloud spend by 50% to get the CFO off my ass."

In fact, surprise AWS bills are so common that they have spawned a cottage industry of AWS cost-reduction consultants. The fact that these consultants exist suggests that the cloud is expensive, at least for some.

You don't run Netflix

Your startup idea might become the new Netflix. Before your SaaS metrics prove that trajectory inevitable, you might not need microservices. Or multi-region redundancy. You'd burn through your seed round before you'd even have a chance to validate your idea.

The tragedy of the hotel breakfast buffet

One factor contributing to the overly complex (and expensive) cloud application architectures is the plethora of services you have at your disposal.

The cloud is not just VMs. You have managed databases, message queues, CDNs, and a ton of other things.

We developers are hyper-curious beings, which is a polite way of saying that we like trying out cool shit! AWS (and Azure and GCP) makes it way too easy.

These managed services enable computing on demand, but they also make unnecessarily complex architectures easy to build.

When there's little oversight of cloud spending, we developers tend to overeat!

The case for the cloud

I think Karl's arguments are worth another look, if only because the alternative (running or renting bare metal servers) can also be expensive and carries a non-zero opportunity cost, too.

Since no single answer to this question fits every audience, let's focus on the needs of an imaginary bootstrapped B2B SaaS startup.

Being bootstrapped means watching your budget like a hawk. It's your money, after all.

It also means focusing on finding (and proving) product/market fit quickly, and with it, a quick path to profitability.

Assuming that you have one or two developers who are equally skilled in cloud-native and "handcrafted" architectures, which way should you go: cloud-first, or renting (or buying) your own servers?

Comparing direct costs

You could rent dedicated servers for next to nothing.

For instance, have a look at the server auctions at Hetzner. At the time of writing, I could get a box with an Intel Core i7-6700 and 64 GB of RAM for about €40 per month.

That's less than I would spend on a company phone plan for one employee. As a business owner, I would barely notice such an expense.

And yet, one or two servers in this configuration could power my app for a year or two without breaking a sweat.

Try configuring a similarly specced machine in the cloud, and you're likely looking at a monthly bill upwards of $300.
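To put the two price points side by side over the "one or two servers for a year or two" horizon, here is a minimal arithmetic sketch. The €40 and $300 figures are the ones quoted above; the function names and the `eur_to_usd` exchange rate are assumptions for illustration, and since $300 is a lower bound for the cloud VM, the computed difference is a rough floor rather than a precise figure.

```python
def total_cost(monthly_price: float, months: int, servers: int = 1) -> float:
    """Total rent for `servers` identical machines over `months` months."""
    return float(monthly_price * months * servers)


def compare(months: int, servers: int = 1, eur_to_usd: float = 1.0) -> dict:
    """Compare renting dedicated boxes with similarly specced cloud VMs.

    Prices are the rough figures quoted in the text: about EUR 40/month for
    the auction server, upwards of USD 300/month for the cloud VM.
    `eur_to_usd` is an assumed exchange rate; the default of 1.0 treats the
    two currencies as roughly at par, which is a simplification.
    """
    dedicated = total_cost(40 * eur_to_usd, months, servers)
    cloud_vm = total_cost(300, months, servers)
    return {
        "dedicated": dedicated,
        "cloud_vm": cloud_vm,
        "difference": cloud_vm - dedicated,
    }


# Two servers for two years: the upper end of the scenario described above.
print(compare(months=24, servers=2))
```

Plug in your actual quotes and exchange rate; the point is only the order of magnitude of the gap.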

However, this comparison only makes sense if you're migrating apps from your own datacenter to the cloud and moving them onto VMs is the first step in that process. When starting from scratch, you would not necessarily make the same decision. More on that later.

And yet, you can probably still run your app in the cloud for free, at least at first.

That's because the cloud vendors really want your business.

Consider Microsoft for Startups. You can get up to $150,000 in Azure credits to develop and operate your app, not to mention Office and Visual Studio licenses and other incentives.

Once you outgrow the program, you won't be paying list prices, either. Big customers have enterprise agreements and don't pay anything near the retail prices.
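How long would a pile of credits actually last? The sketch below counts the full months of cloud spend a credit pool covers. The $150,000 figure comes from the text; the growth rate of your bill and the `validity_months` expiry are hypothetical parameters, since the actual program terms vary and you should check them yourself.

```python
from typing import Optional


def credit_runway_months(
    credits: float,
    start_spend: float,
    monthly_growth: float = 0.0,
    validity_months: Optional[int] = None,
) -> int:
    """Count the full months of cloud spend that a pool of credits covers.

    start_spend: the first month's bill.
    monthly_growth: assumed month-over-month growth of the bill (0.1 = 10%).
    validity_months: hypothetical expiry of the credits, if any.
    """
    if start_spend <= 0:
        raise ValueError("start_spend must be positive")
    if monthly_growth < 0:
        raise ValueError("monthly_growth must not be negative")
    months = 0
    remaining = credits
    spend = start_spend
    while remaining >= spend:
        if validity_months is not None and months >= validity_months:
            break  # the credits expire before they run out
        remaining -= spend
        months += 1
        spend *= 1 + monthly_growth
    return months


# A flat $300/month bill versus a $1,000 bill growing 10% per month.
print(credit_runway_months(150_000, 300))
print(credit_runway_months(150_000, 1_000, monthly_growth=0.10))
```

In practice, expiry dates tend to matter more than the headline amount for a small app, which is why the sketch lets `validity_months` cut the runway short.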

That said, yes, you could at some point reach the same conclusions as Basecamp. I am not arguing that the cloud is always cheaper. When we limit our inquiry to the monthly cloud bills alone, it may not be. For instance, if your startup does not qualify for the incentives, your financial decisions will be different.

Comparing opportunity costs

My conclusions differ from Karl's once we consider the opportunity costs.

Karl wrote:

[T]here is productivity and opportunity cost from having to understand, deal with and work around the idiosyncracies of complex cloud platforms. This effort is much better spent on delivering value to users.

He proposes putting your customers' needs above all else and, to achieve that, doing the following:

- Optimise for operational simplicity - by choosing a deployment target that's easiest to maintain and operate. He mentions Heroku, Render or Fly as an alternative to AWS or GCP, which is debatable - Heroku is neither cheap nor easy to operate once you outgrow the cheapest dynos. I have no experience with the other two.

- Optimise for internal simplicity - I think he argues for the simplest possible architecture here, proposing to use "boring technologies" that are well understood.

- Focus on delivering value to your users - this mindset should drive all technical decisions, and you should evaluate every choice not on its technical merits alone but primarily on whether it adds the most value for the people using the product.

I won't argue against any of that since I share his beliefs. I differ in how I would realize them.

Recall that in our example, we're trying to find a product/market fit for our bootstrapped startup. Delivering value to users is our job #1.

Therefore, I would rather direct my team's energy toward customer development and shipping features quickly than toward building our own infra.

Even though operating your own LAMP stack server, for example, is well documented, there are adjacent concerns that you have to take into account, such as security, regulatory compliance (hello, GDPR!), reliability, disaster recovery, etc.

Cloud vendors do a remarkable job of taking care of these mundane details (for a fee).

You might find out, for instance, that serverless architecture is a good fit for your use case. Instead of running a full VM with all the overhead of following the security bulletins and applying patches on the regular, you could just deploy to Azure Functions or AWS Lambda, and only worry about your product's features.
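To make "only worry about your product's features" concrete, here is a minimal AWS Lambda handler in Python. It assumes the function sits behind Amazon API Gateway with proxy integration, which passes query parameters in `event["queryStringParameters"]` and expects a `statusCode`/`headers`/`body` response; the `name` parameter and greeting are hypothetical. Azure Functions uses a different programming model, so this shape does not carry over as-is.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda calls for each request (API Gateway proxy event)."""
    # API Gateway sends None instead of {} when there is no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

There is no OS, web server, or patch schedule in sight: the vendor runs all of that, which is exactly the abstraction layer the next paragraph is about.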

Indeed, my main case in favor of deploying to the cloud by default rests primarily on the extra abstraction layers that the cloud vendors offer on top of mundane VMs.

It's not that you couldn't run your own serverless stack on your own hardware. Why would you spend your precious time on that, however, when your job #1 is finding and satisfying your first customers?

Finding the right answer

Since this polemic stays at a very high level, I won't pretend to have answers for every scenario.

There are indeed situations when running your own VM (or a bare-metal server) may be preferable to renting machines from Amazon (or Microsoft).

To avoid the "overeating trap", I would propose aligning the incentives of the technical team with the organization's goals and values.

There are such things as budgets, and the CTO should enforce strict discipline with regard to cloud spending.

I've seen teams run completely amok when given the right to spin up an arbitrary number of cloud resources at will. That's not something a bootstrapped startup can afford. And even at a Fortune 500 company, the CFO would eventually come knocking on doors and asking tough questions.
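Enforcing a budget can start very small. The sketch below checks month-to-date spend against a fixed monthly budget and raises alerts when actual or forecast spend crosses a threshold. It is a hypothetical helper, not any vendor's API: the spend figure is assumed to come from your billing export, and the forecast is a naive linear extrapolation. The managed equivalents are AWS Budgets and Azure Cost Management budgets.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class BudgetStatus:
    spent: float
    forecast: float
    budget: float
    alerts: List[str] = field(default_factory=list)


def check_budget(
    spent_to_date: float,
    day_of_month: int,
    days_in_month: int,
    budget: float,
    thresholds: Sequence[float] = (0.5, 0.8, 1.0),
) -> BudgetStatus:
    """Compare month-to-date cloud spend with a fixed monthly budget."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must fall within the month")
    # Naive forecast: assume the rest of the month looks like the days so far.
    forecast = spent_to_date / day_of_month * days_in_month
    status = BudgetStatus(spent_to_date, forecast, budget)
    for t in sorted(thresholds):
        if spent_to_date >= t * budget:
            status.alerts.append(f"actual spend reached {t:.0%} of budget")
        elif forecast >= t * budget:
            status.alerts.append(f"forecast spend will reach {t:.0%} of budget")
    return status


# $600 spent by day 10 of a 30-day month against a $1,000 budget.
print(check_budget(600, 10, 30, 1_000).alerts)
```

Alerting on the forecast, not just the actual spend, is the design choice that matters here: it gives the team a chance to react before the invoice arrives.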

When comparing your choices, I would propose the following metrics:

- Time to market - time is your most valuable resource besides your people. How can you ship stuff most quickly?

- Time to change - you'll need to make a lot of decisions and make a lot of changes. Some of them will impact your code and require new resources, e.g., a new type of database. How quickly can you provision these? And once you don't need a particular resource, how easy is it to retire it and stop paying for it?

- Time to onboard - how easy is it to onboard new developers on your stack? This is where "AWS is the new IBM" comes from. Pretty much every developer knows AWS these days, at least "kinda, sorta" (AWS is huge).

Figure these out, and only then compare the monthly spend. Chances are that it won't matter much in the first few years of your startup anyway.
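One way to honor "time metrics first, spend second" is a lexicographic ranking: order the candidate setups by their total time cost and let the monthly spend only break ties. This is a hypothetical sketch; the option names and numbers are placeholders, not measurements, and weighting the three time metrics equally is an assumption you may well want to change.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Option:
    name: str
    days_to_market: float   # time to ship the first version
    days_to_change: float   # time to provision (or retire) a new kind of resource
    days_to_onboard: float  # time until a new developer is productive
    monthly_spend: float


def rank(options: List[Option]) -> List[Option]:
    """Order options by total time cost; monthly spend only breaks ties."""
    return sorted(
        options,
        key=lambda o: (
            o.days_to_market + o.days_to_change + o.days_to_onboard,
            o.monthly_spend,
        ),
    )


# Placeholder estimates for illustration only.
candidates = [
    Option("option-a", 30, 5, 3, 300),
    Option("option-b", 45, 10, 7, 40),
    Option("option-c", 30, 5, 3, 200),
]
print([o.name for o in rank(candidates)])
```

Because the sort key is a tuple, a cheaper setup only wins when it is no slower on the time metrics, which mirrors the ordering argued for above.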

Your ability to attract paying customers and find a path to profitability will. Make sure your technical architecture is 100% aligned with this.

P.S.

If you feel like we'd get along, you can follow me on Twitter, where I document my journey.
