How to set up automated deployment of a Linux function app on Azure Functions Consumption plan

Introduction

Azure Functions is a feature-rich platform-as-a-service (PaaS) offering of the Azure cloud, competing with AWS Lambda in the serverless space. It is particularly well suited for event-driven systems and, by its design, encourages a modular, decoupled system architecture, e.g., by way of micro-services.

About serverless

The "serverless" moniker is both appealing and misleading.

The appeal is straightforward: only care about your code. The platform takes care of the runtime and dependencies, among other things.

The misdirection lies in the moniker itself. For one, serverless should not be equated with zero thinking about infrastructure and deployment. It also presents no threat to the careers of DevOps professionals.

Think about serverless the way you would think about renting a serviced apartment. It comes with extra features you won't find in a standard apartment, e.g., there's weekly cleaning and no extra charge for a plumber fixing the pipes.

Alas, there are also things you can't do. A serverless platform puts hard limits on how you can configure the system, and when there's a feature that's missing, well, you are out of luck - no sudo apt install $package for you.

For a great many applications, the benefits outweigh the costs.

And to achieve maximum benefits, it's worth investing in your CI/CD pipeline. After setting up the automated deployment of your serverless app, you aren't really thinking about servers at all.

Why you should read this tutorial

I've gone through multiple code samples and tutorials, looking for guidance on configuring the CI/CD pipeline for an app running on Azure Functions. There are many, many out there - Microsoft Docs are excellent and are usually my first place to inquire.

That said, given the various tiers and plans Azure Functions offers, not every tutorial works out of the box on every plan.

The most prominent entrance to the Azure Functions world is through the Consumption plan since you are not being charged any flat fees regardless of usage but only for when your code actually runs. It is indeed this plan that I chose for my experiments and ended up a little bruised along the way.

This tutorial/explainer is tailored to fellow hackers setting up their apps on the Linux Consumption plan. It is in many ways generic in that the principles can be applied to the other Azure Functions plans, though. I highlight any solutions specific to the Consumption plan.

When (not) to worry about this

I would not worry about this in the initial stages of a small-ish project where you are the only developer.

You can always create resources manually in Azure or via Azure CLI, and deployment from VS Code or Visual Studio is just a few clicks away.

The time to think about automated deployment is when another developer joins the team or when the infrastructure becomes stable, and you get tired of manually deploying several times a day, whichever comes first.

By "stable infrastructure", I mean: you have figured out which database you need, you have created other supplemental resources like the Service Bus namespace, and now you spend most of your effort on writing the application code.

Pre-requisites

I assume some experience developing on the Azure Functions platform and familiarity with general Azure topics. This article covers setting up a deployment pipeline on Github; if you host your code elsewhere, e.g., on Gitlab, you'll have to adjust the Github-specific bits.

Furthermore, this article addresses a specific scenario for a Functions app running on the Consumption plan on Linux. If your app runs on Windows or on a Premium or App Service plan, then some of the quirks and limitations addressed below do not apply to you.

The take-away promise

After reading this tutorial, you will learn about:

- how to understand the ARM templates and how to use them to describe the resources you want to deploy to Azure declaratively

- how to deploy the resources from ARM templates with the Azure CLI

- how to use Github workflows to trigger deployments based on repository events and on-demand

My sole goal is to empower you to set up your own deployment pipeline for your very own project or app in Azure Functions.

About this example

We'll go beyond "Hello world" and look at the automation pipeline of JComments, my Open Source project aimed at publishers using the JAM stack.

It provides commenting functionality to static websites. One API is used at build time to bake the existing comments into the generated HTML. The same API is then called by JavaScript to fetch any comments posted after the page was built. Another API processes incoming comments from web pages.

The project is written in TypeScript and runs on Node.js, which is one of the languages supported by Azure Functions. It uses a Postgres database, and when deployed to Azure, it can take advantage of the managed Azure Postgres service. A few event-driven use cases utilize a message queue, which in Azure means using the Service Bus.

The architecture is pretty vanilla, so the chances are that you'll be able to derive learnings from this article to apply to your own app and its setup on Azure.

The setup

The code lives on Github, which also provides the CI/CD pipeline.

You'll find the workflows under .github/workflows, and we'll take a look at them at the end of this tutorial.

The ARM templates and scripts are hosted in infra/azure. We will cover these first.

It's common to set up your resources manually at first - I did that, and likely you did, too. Creating an ARM template manually from scratch would be an exercise only a masochist would enjoy. Even taking an exported template and making a few tweaks would usually take a few commits and failed deploys to get right.

The first step is to export the ARM templates of your resources so that we can edit and generalize them.

Preparing ARM templates for automated deployment

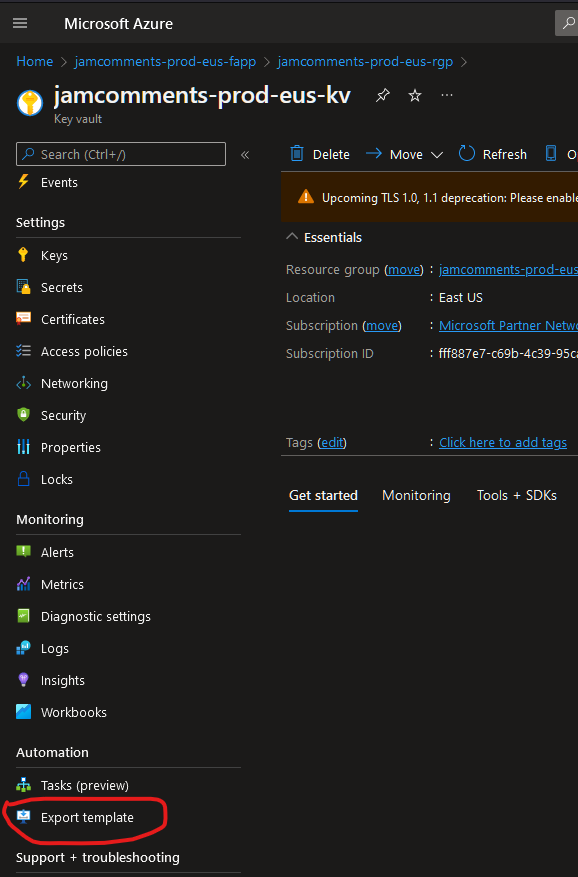

Navigate to the resource and look up the link "Export template" under the menu item "Automation," just above "Support + troubleshooting."

Click it and wait until Azure generates the template, then save it somewhere where you can open it locally with your favorite editor.

The exported template will have a lot of specific bits you would want to parametrize if you were to use it for deployment to another resource group or subscription. In particular, you'll want to think about:

- naming conventions, which are help organize resources by a common factor such as environment or location,

- configuration parameters, esp. if they could be different from one environment to another

- dependencies on other resources, which can go either way (consumed or provided or both)

Let's have a look at the ARM template of an App Service Plan when exported:

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"serverfarms_ASP_jamcommentsstaging_9cbf_name": {

"defaultValue": "ASP-jamcommentsstaging-9cbf",

"type": "String"

}

},

"variables": {},

"resources": [

{

"type": "Microsoft.Web/serverfarms",

"apiVersion": "2021-03-01",

"name": "[parameters('serverfarms_ASP_jamcommentsstaging_9cbf_name')]",

"location": "East US",

"sku": {

"name": "B1",

"tier": "Basic",

"size": "B1",

"family": "B",

"capacity": 1

},

"kind": "linux",

"properties": {

"perSiteScaling": false,

"elasticScaleEnabled": false,

"maximumElasticWorkerCount": 1,

"isSpot": false,

"reserved": true,

"isXenon": false,

"hyperV": false,

"targetWorkerCount": 0,

"targetWorkerSizeId": 0,

"zoneRedundant": false

}

}

]

}What you would want here is not to have the resource name hard-coded and (optionally) to extract the SKU and properties to parameters that can change independently of the template, e.g., from one environment to another.

Including a parameters template is a convenient way of achieving this. You can also supply parameters from the command-line for any values you don't want to be persisted in your code repository, e.g., secrets.

A brief interlude about the naming convention

You'll want to think about the naming conventions to use and take into account aspects of your infrastructure that won't change as well as those that might.

For example, you may want to make the location of the app configurable and to use multiple environments that are deployed separately and may have different sizing profiles. In our example, we'll have staging and production. As you will see, we'll be able to add another very easily, perhaps development.

The naming convention I'll use here will use jcomm- as the leading prefix for everything. The next segment will be the environment shorthand, staging- for the staging environment and prod- for production.

The final segment if the naming prefix will be the location. Here, we'll be using "East US" and shorten it to eus- for the prefix.

For each resource we'll pick a suitable abbreviation. For an App Service Plan, let's use asp. That's also the only part that the template will decide for itself, so to speak.

The big advantage of doing this is that you will immediately know what's what at first glance when you explore a resource group in the Azure portal. It's a decision you'd make at the beginning and then use consistently.

Pick whatever pattern that suits your needs best. Perhaps the location isn't important to you, or it won't ever change. Whatever. The point is to identify the commonalities as well as differences.

The set up, continued

To accommodate our requirements, the first part of the template will morph into this:

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"prefix": {

"type": "String"

}

},

"variables": {

"componentName": "[concat(parameters('prefix'), '-asp')]"

},What about the sku and properties? Let's extract them to the parameters file and save it to a file. Later, we may want to merge the function app and its App Service plan to a single deployment template and do so for their parameters as well.

The parameters file then reads as follows:

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"servicePlanSku": {

"value": {

"name": "Y1",

"tier": "Dynamic",

"size": "Y1",

"family": "Y",

"capacity": 0

}

},

"servicePlanProperties": {

"value": {

"perSiteScaling": false,

"elasticScaleEnabled": false,

"maximumElasticWorkerCount": 1,

"isSpot": false,

"reserved": true,

"isXenon": false,

"hyperV": false,

"targetWorkerCount": 0,

"targetWorkerSizeId": 0,

"zoneRedundant": false

}

}

}

}

With that set up separately, the final version of the App Service plan template will look like this:

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"prefix": {

"type": "String"

},

"location": {

"type": "String"

},

"servicePlanSku": {

"type": "object"

},

"servicePlanProperties": {

"type": "object"

}

},

"variables": {

"componentName": "[concat(parameters('prefix'), '-asp')]"

},

"resources": [

{

"type": "Microsoft.Web/serverfarms",

"apiVersion": "2021-03-01",

"name": "[variables('componentName')]",

"location": "[parameters('location')]",

"sku": "[parameters('servicePlanSku')]",

"kind": "linux",

"properties": "[parameters('servicePlanProperties')]"

}

]

}As you can see, the name of the resource we're deploying has become a variable that takes the provided prefix from parameters and adds its own abbreviation to it. The resource is deployed from its ARM template and one or more parameter files together, as you will see below.

We would use the same logic for all the other parts of our stack.

The infrastructure

We will start with the infrastructure - Key Vault, Postgres, and Service Bus.

Azure Key Vault

An important part is the Azure Key Vault, which holds various secrets such as the database passwords and API keys to third-party services.

The Key Vault is both a provider and a consumer, at least in the sense that other parts of the stack will put their secrets into it when deployed.

From now on, I won't present the original ARM template and only highlight the final, edited template. I also won't include the parameters - again, the method to extract and then apply the parameters is the same. My recommendation is that your method to create resources should be consistent and follow the same logic so that adding a new resource type is as easy as possible.

The Key Vault template looks like this:

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"sku": {

"type": "object",

"metadata": {

"description": "keyvault sku"

}

},

"location": {

"type": "string"

},

"prefix": {

"type": "string"

},

"mailgunApiKey": {

"type": "securestring"

},

"mailgunSender": {

"type": "string"

},

"mailgunDomain": {

"type": "string"

}

},

"variables": {

"componentName": "[concat(parameters('prefix'), '-kv')]"

},

"resources": [

{

"type": "Microsoft.KeyVault/vaults",

"apiVersion": "2021-11-01-preview",

"name": "[variables('componentName')]",

"location": "[parameters('location')]",

"properties": {

"sku": "[parameters('sku')]",

"tenantId": "[subscription().tenantId]",

"accessPolicies": [],

"enabledForDeployment": false,

"enabledForDiskEncryption": false,

"enabledForTemplateDeployment": true,

"enableSoftDelete": true

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('componentName'), '/mailgunApiKey')]",

"dependsOn": [

"[resourceId('Microsoft.KeyVault/vaults', variables('componentName'))]"

],

"properties": {

"value": "[parameters('mailgunApiKey')]"

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('componentName'), '/mailgunSender')]",

"dependsOn": [

"[resourceId('Microsoft.KeyVault/vaults', variables('componentName'))]"

],

"properties": {

"value": "[parameters('mailgunSender')]"

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('componentName'), '/mailgunDomain')]",

"dependsOn": [

"[resourceId('Microsoft.KeyVault/vaults', variables('componentName'))]"

],

"properties": {

"value": "[parameters('mailgunDomain')]"

}

}

]

}Notice the configuration property "enabledForTemplateDeployment": true - this will let other components write secrets into the Key Vault.

Also, notice that the deployment template expects three parameters specifying the "secrets" the app will consume to interact with the Mailgun API. Granted, only the API key is really a secret, and it's debatable whether the sender (an e-mail address) or the domain needs to be in the Key Vault. You'll make your own choices depending on how you want to handle similar considerations.

If you are wondering where the Mailgun secrets come from, you'll see soon enough.

The Service Bus namespace

Adding a Service Bus namespace to your project is surprisingly easy.

Pick a SKU depending on your usage - if you can get by with just Queues, you can go with the Basic pricing tier; to use Topics and Subscriptions, you'll need Standard.

We'll put the connection string to the Key Vault so that the apps can access it to send and receive messages.

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"sku": {

"type": "object",

"metadata": {

"description": "Microsoft.ServiceBus/namespaces sku"

}

},

"location": {

"type": "string"

},

"prefix": {

"type": "string"

}

},

"variables": {

"componentName": "[concat(parameters('prefix'), '-svcbus')]",

"keyVaultName": "[concat(parameters('prefix'), '-kv')]"

},

"resources": [

{

"type": "Microsoft.ServiceBus/namespaces",

"apiVersion": "2021-11-01",

"name": "[variables('componentName')]",

"location": "[parameters('location')]",

"sku": "[parameters('sku')]",

"properties": {

"zoneRedundant": false

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('keyvaultName'), '/serviceBusPrimaryConnectionString')]",

"dependsOn": [

"[concat('Microsoft.ServiceBus/namespaces/', variables('componentName'))]"

],

"properties": {

"value": "[listKeys(resourceId('Microsoft.ServiceBus/namespaces/authorizationRules', variables('componentName'), 'RootManageSharedAccessKey') ,'2017-04-01').primaryConnectionString]"

}

}

]

}Postgres Flexible Server

Lastly, let's deploy the Postgres database.

There's nothing fundamentally new here. I included it in the tutorial since I've had to tackle an unexpected problem when specifying multiple configuration parameters at the same time, and I reckon you might want to do that, too.

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"location": {

"type": "string"

},

"sku": {

"type": "object"

},

"storageSizeGB": {

"type": "int"

},

"administratorLoginPassword": {

"type": "securestring"

},

"databases": {

"type": "array"

},

"prefix": {

"type": "string"

}

},

"variables": {

"componentName": "[concat(parameters('prefix'), '-pg')]",

"administratorLogin": "jcstgadmin",

"keyVaultName": "[concat(parameters('prefix'), '-kv')]"

},

"resources": [

{

"type": "Microsoft.DBforPostgreSQL/flexibleServers",

"apiVersion": "2021-06-01",

"name": "[variables('componentName')]",

"location": "[parameters('location')]",

"sku":"[parameters('sku')]",

"properties": {

"version": "13",

"administratorLogin": "[variables('administratorLogin')]",

"administratorLoginPassword": "[parameters('administratorLoginPassword')]",

"availabilityZone": "1",

"storage": {

"storageSizeGB": "[parameters('storageSizeGB')]"

},

"backup": {

"backupRetentionDays": 7,

"geoRedundantBackup": "Disabled"

},

"network": {},

"highAvailability": {

"mode": "Disabled"

},

"maintenanceWindow": {

"customWindow": "Disabled",

"dayOfWeek": 0,

"startHour": 0,

"startMinute": 0

}

}

},

{

"type": "Microsoft.DBforPostgreSQL/flexibleServers/firewallRules",

"apiVersion": "2021-06-01",

"name": "[concat(variables('componentName'), '/AllowAllAzureServicesAndResourcesWithinAzureIps')]",

"dependsOn": [

"[resourceId('Microsoft.DBforPostgreSQL/flexibleServers', variables('componentName'))]"

],

"properties": {

"startIpAddress": "0.0.0.0",

"endIpAddress": "0.0.0.0"

}

},

{

"type": "Microsoft.DBforPostgreSQL/flexibleServers/configurations",

"apiVersion": "2021-06-01",

"name": "[concat(variables('componentName'), '/azure.extensions')]",

"dependsOn": [

"[resourceId('Microsoft.DBforPostgreSQL/flexibleServers', variables('componentName'))]",

"[resourceId('Microsoft.DBforPostgreSQL/flexibleServers/firewallRules', variables('componentName'), 'AllowAllAzureServicesAndResourcesWithinAzureIps')]"

],

"properties": {

"value": "PGCRYPTO",

"source": "user-override"

}

},

{

"copy": {

"name": "databasecopy",

"count": "[length(parameters('databases'))]"

},

"type": "Microsoft.DBforPostgreSQL/flexibleServers/databases",

"apiversion": "2021-06-01",

"name": "[concat(variables('componentName'), '/', parameters('databases')[copyIndex()].name)]",

"dependsOn": [

"[resourceId('Microsoft.DBforPostgreSQL/flexibleServers', variables('componentName'))]"

],

"properties": {

"charset": "UTF8",

"collation": "en_US.utf8"

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('keyVaultName'), '/pgHost')]",

"properties": {

"value": "[concat(variables('componentName'), '.postgres.database.azure.com')]"

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('keyVaultName'), '/pgAdminUsername')]",

"properties": {

"value": "[variables('administratorLogin')]"

}

},

{

"type": "Microsoft.KeyVault/vaults/secrets",

"apiVersion": "2019-09-01",

"name": "[concat(variables('keyVaultName'), '/pgAdminPassword')]",

"properties": {

"value": "[parameters('administratorLoginPassword')]"

}

}

]

}The problem I had was with the resource types Microsoft.DBforPostgreSQL/flexibleServers/firewallRules and Microsoft.DBforPostgreSQL/flexibleServers/configurations.

When both of them only had a dependency on [resourceId('Microsoft.DBforPostgreSQL/flexibleServers', variables('componentName'))], the firewall rules were deployed while the attempt to white-list the pgcrypto extension failed with a response code Conflict.

What gives?

I suspect that in that scenario, the orchestrator tries to write to the same configuration file, and either one of the attempts can fail since the other one may not be finished yet.

I solved it by making the second configuration depend on the first one being finished, but I think it's a hacky workaround at best.

If you were to add multiple custom configurations to my database server, which you very well might want to do, you would end up with a growing mess of dependencies that the orchestrator should figure out on its own, in my opinion anyway.

That, or I've overlooked a better way of handling this. Please let me know in the comments if you know it!

Another noteworthy feature I want to highlight is how the ARM template for the Postgres database puts its own secrets into the Key Vault. Unlike with the function app, which we'll cover shortly, we haven't given the Postgres any rights to read or write to the Key Vault here, and yet it can do so.

This is a little unexpected but very practical since the function app will need the Postgres credentials to talk to it.

The function app

Azure Functions lets you choose from several pricing plans that come with different performance and scaling characteristics.

The Consumption plan is great for getting started as you only pay for the actual usage, and there is a generous free monthly grant. It may also work great in production for apps that do not handle continuous sustained traffic.

The key drawback of the Consumption plan on Linux is that it does not support deployment from a ZIP package directly. Rather, you have to put the ZIP package somewhere, e.g., into a container in Azure Blob Storage, and provide the URL to the package.

This is clearly stated in the docs, but not every tutorial you will find on the Internet takes this into account. For example, if you thought you could just use the Azure Functions Github action to deploy your code, it won't work for Linux apps on the Consumption plan. The pipeline will finish green, but your code won't deploy.

The correct template to build on is this one referenced in Azure docs. But you'll need a little more code to make it work.

My solution is based on this fantastic article by Mark Heath, which got me to 80% of where I needed to be.

Below is the rather long ARM template for the function app, which also contains the App Service plan resource. You'll see that it does not specify the package URL - we don't have it yet.

{

"$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"parameters": {

"location": {

"type": "string"

},

"prefix": {

"type": "string"

},

"fullDeployment": {

"type": "bool"

},

"servicePlanSku": {

"type": "object",

"metadata": {

"description": "sku of Microsoft.Web/serverfarms"

}

},

"servicePlanProperties": {

"type": "object",

"metadata": {

"description": "properties of Microsoft.Web/serverfarms"

}

}

},

"variables": {

"componentName": "[concat(parameters('prefix'), '-fapp')]",

"servicePlanName": "[concat(parameters('prefix'), '-fasp')]",

"keyVaultName": "[concat(parameters('prefix'), '-kv')]",

"appSettings": {

"APPINSIGHTS_INSTRUMENTATIONKEY": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=appInsightsInstrumentationKey)')]",

"AzureWebJobsStorage": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=storageAccountConnectionString-jamcomments-fapp', ')')]",

"FUNCTIONS_EXTENSION_VERSION": "~4",

"FUNCTIONS_WORKER_RUNTIME": "node",

"MAILGUN_API_KEY": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=mailgunApiKey)')]",

"MAILGUN_DOMAIN": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=mailgunDomain)')]",

"MAILGUN_SENDER": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=mailgunSender)')]",

"PGDATABASE": "jamcomments",

"PGSSLMODE": "require",

"PGHOST": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=pgHost)')]",

"PGUSER": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=pgAdminUsername)')]",

"PGPASSWORD": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=pgAdminPassword)')]",

"SERVICEBUS_CONNECTION": "[concat('@Microsoft.KeyVault(VaultName=', variables('keyVaultName'), ';SecretName=serviceBusPrimaryConnectionString)')]"

}

},

"resources": [

{

"type": "Microsoft.Web/serverfarms",

"name": "[variables('servicePlanName')]",

"apiVersion": "2020-09-01",

"location": "[parameters('location')]",

"kind": "linux",

"properties": "[parameters('servicePlanProperties')]",

"sku": "[parameters('servicePlanSku')]"

},

{

"condition": "[parameters('fullDeployment')]",

"dependsOn": [

"[concat('Microsoft.Web/serverfarms/', variables('servicePlanName'))]"

],

"type": "Microsoft.Web/sites",

"apiVersion": "2021-03-01",

"name": "[variables('componentName')]",

"location": "[parameters('location')]",

"kind": "functionapp,linux",

"identity": {

"type": "SystemAssigned"

},

"properties": {

"enabled": true,

"serverFarmId": "[resourceId('Microsoft.Web/serverfarms', variables('servicePlanName'))]",

"siteConfig": {

"numberOfWorkers": 1,

"linuxFxVersion": "Node|14",

"alwaysOn": false,

"functionAppScaleLimit": 200,

"minimumElasticInstanceCount": 0

},

"clientAffinityEnabled": false,

"keyVaultReferenceIdentity": "SystemAssigned"

},

"resources": [

{

"condition": "[parameters('fullDeployment')]",

"type": "config",

"name": "appSettings",

"apiVersion": "2020-09-01",

"dependsOn": [

"[concat(parameters('prefix'), '-keyVaultAccessPolicy')]",

"[resourceId('Microsoft.Web/sites', variables('componentName'))]"

],

"properties": "[variables('appSettings')]"

}

]

},

{

"type": "Microsoft.Resources/deployments",

"apiVersion": "2020-10-01",

"name": "[concat(parameters('prefix'), '-keyVaultAccessPolicy')]",

"dependsOn": [

"[resourceId('Microsoft.Web/sites', variables('componentName'))]"

],

"properties": {

"mode": "Incremental",

"template": {

"$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",

"contentVersion": "1.0.0.0",

"resources": [

{

"type": "Microsoft.KeyVault/vaults/accessPolicies",

"name": "[concat(variables('keyVaultName'), '/add')]",

"apiVersion": "2019-09-01",

"properties": {

"accessPolicies": [

{

"tenantId": "[reference(resourceId('Microsoft.Web/sites', variables('componentName')), '2018-02-01', 'Full').identity.tenantId]",

"objectId": "[reference(resourceId('Microsoft.Web/sites', variables('componentName')), '2018-02-01', 'Full').identity.principalId]",

"permissions": {

"keys": [

"get"

],

"secrets": [

"get"

]

}

}

]

}

}

]

}

}

}

]

}On a side note, giving the function app an identity, which is this bit:

"identity": {

"type": "SystemAssigned"

},makes it possible to give it permissions to talk to the Key Vault and get a few secrets from it and make them available to the application code via environment variables. The permission is granted by the resource "type": "Microsoft.KeyVault/vaults/accessPolicies", and the secrets are inserted into the environment variables in the appSettings variables.

With the layout of the ARM templates being presented and explained, we can move on to the scripts that deploy them.

The deployment process

The infrastructure is deployed separately from the function app. The idea is to run the infrastructure deployment only when required so that most of the time, only the function app is deployed because only the application code changes frequently.

I won't go over the infrastructure deployment. A curious reader can peruse the infra/azure/platform/scripts/create-infra.sh Bash script.

The deployment scripts

The function app has two scripts: one that creates the function app and its related resources (storage account, app service plan, Service Bus topics), and another that deploys the application code.

First, let's have a look at how the function app and its specific resources are deployed (infra/azure/apps/api/scripts/create-funcapp.sh):

#!/bin/bash

set -e

: "${environment:?variable not set or empty}"

: "${fullDeployment:?variable not set or empty}"

if [ "$CI" == "true" ]

then

echo "Running on CI"

export AZURE_CORE_OUTPUT=none

else

echo "Not running on CI"

fi

echo "Starting function app deployment"

case "$environment" in

staging)

paramFolder="staging"

;;

prod)

paramFolder="prod"

;;

*)

echo "unknown environment: $environment" >&2

exit 1

esac

templates="infra/azure/apps/api/templates"

commonParams="$templates/params/common"

envParams="$templates/params/$paramFolder"

location="East US"

locationSymbol="eus"

prefix="jcomm-$environment-$locationSymbol"

rgp="$prefix-rgp"

echo "Creating resource group $rgp"

az group create --name "$rgp" --location "$location"

echo "Creating storage account"

az deployment group create \

-n "$prefix-storage-account" \

-g "$rgp" \

-f "$templates"/storage.json \

-p "$commonParams"/storage-account-parameters.json \

"$envParams"/storage-account-parameters.json \

prefix="$prefix" \

environment="$environment" \

locationSymbol="$locationSymbol" \

location="$location"

echo "Creating service bus topics"

az deployment group create \

-n "$prefix-sb-topics" \

-g "$rgp" \

-f "$templates"/svcbus-topics.json \

-p "$commonParams"/svcbus-topics-parameters.json \

"$envParams"/svcbus-topics-parameters.json \

prefix="$prefix"

echo "Creating function app"

az deployment group create \

-n "$prefix-fapp" \

-g "$rgp" \

-f "$templates"/funcapp.json \

-p "$commonParams"/funcapp-parameters.json \

"$envParams"/funcapp-parameters.json \

prefix="$prefix" \

location="$location" \

fullDeployment="$fullDeployment"

echo "Finished creating function app"As you can see, a few key parameters that the templates expect are defined here, such as the location of the resources and the prefix. The script needs two environment variables, environment and fullDeployment, supplied by the Github runtime triggering the workflow run.

Next, here is how the application code is deployed (infra/azure/apps/api/scripts/deploy-funcapp.sh):

#!/bin/bash

set -e

: "${environment:?variable not set or empty}"

echo "Get the storage connection string"

AZURE_STORAGE_CONNECTION_STRING=$(az storage account show-connection-string -g "jcomm-${environment}-eus-rgp" -n "jc${environment}eusstgacc" --query "connectionString" -o tsv)

container=jamcomments-funcapp-releases

timestamp=$(date +"%Y%M%d%H%M%N")

blobName="functionapp${timestamp}.zip"

echo "Create storage container"

az storage container create -n "$container" --public-access off --connection-string "$AZURE_STORAGE_CONNECTION_STRING"

echo "Zip the app"

zip -q -r "$blobName" . -x@.funcignore -x .funcignore

echo "Upload ${blobName} to storage"

az storage blob upload -c "$container" -f "$blobName" -n "$blobName" --connection-string "$AZURE_STORAGE_CONNECTION_STRING"

blobUrl=$(az storage blob url -c "$container" -n "$blobName" -o tsv --connection-string "$AZURE_STORAGE_CONNECTION_STRING")

expiry=$(date --date='5 years' +"%Y-%m-%dT%H:%M:%SZ")

echo "Get the SAS for the ZIP package"

sas=$(az storage blob generate-sas -c "$container" -n "$blobName" --permissions r -o tsv --expiry "$expiry" --connection-string "$AZURE_STORAGE_CONNECTION_STRING")

packageUrl="${blobUrl}?${sas}"

echo "Set the app settings to run from package URL"

az webapp config appsettings set -n "jcomm-${environment}-eus-fapp" -g "jcomm-${environment}-eus-rgp" --settings "WEBSITE_RUN_FROM_PACKAGE=$packageUrl"Quite a few steps to put the ones and zeroes to work, ain't it?

I think the inline comments paint a clear picture but to recap, you have to:

- create a private-access container in the Blob storage

- zip the application files

- upload the ZIP archive to the container

- ask Azure for the URL to the archive

- ask Azure to give you a read-only shared access signature (SAS) to the archive

- construct the full URL

- add a configuration to the function app to run from the zip archive we just uploaded

To be clear, this solution is specific to Linux function apps running on the Consumption plan. If you are on any other plan, you can just use the Azure Functions Github action and let it do the ZIP deployment for you.

I don't fully understand where this limitation comes from. If pressed, I would hypothesise that the Linux function apps on the Consumption plan do not have any dedicated storage (other than the Storage Account that's required for all Functions to operate - but then, why doesn't the runtime use it for the ZIP package hosting?) unlike all the other plans. In reality, I simply don't know, and the docs do not reveal the reasons.

With that said, let's see how this all is tied together in a Github workflow.

The Github workflow

The workflow featured below (see .github/workflows/azure-jam-comments-api.yml in the JComments repo) prepares the environment variables, runs the build and test actions, then calls the scripts (described in the previous section) to create the infrastructure in Azure and deploy the code.

The workflow can be triggered manually (see workflow_dispatch) or automatically by pushes to dev and main branches.

The very first time the workflow should run, or put differently, the very first time a given resource group and its contents are created in Azure, it should run manually with the fullDeployment flag set to true otherwise not all resources will have been created.

For our use case, it's important that we have the zip binary available. The action montudor/action-zip@v1 takes care of that.

name: Deploy JComments API to Azure Functions

on:

workflow_dispatch:

inputs:

environment:

description: 'Name of environment to deploy (staging, prod)'

required: true

default: 'staging'

fullDeployment:

description: 'Whether to do a full deployment or not'

required: true

default: 'false'

push:

branches:

- dev

- master

paths:

- 'infra/azure/apps/api/**'

- '.github/workflows/azure-jam-comments-api.yml'

- 'AzureCommentsApiGet/**'

- 'AzureCommentsApiPost/**'

- 'AzureCommentsApiNotify/**'

- 'sql/**'

- 'src/**'

- 'test/**'

env:

NODE_VERSION: '14.x' # set this to the node version to use (supports 8.x, 10.x, 12.x)

fullDeployment: ${{ github.event.inputs.fullDeployment || 'false' }}

defaults:

run:

shell: bash

jobs:

setupEnv:

name: Set up environments

runs-on: ubuntu-latest

timeout-minutes: 5

steps:

- name: Checkout

uses: actions/checkout@v2.3.4

with:

persist-credentials: false

- name: setup environment variables

id: setupEnv

run: |

echo "Setup environment and secrets"

if [ "${{ github.event.inputs.environment }}" = "staging" ] || [ "${{ github.ref }}" = "refs/heads/dev" ]; then

echo "::set-output name=environment::staging"

echo "::set-output name=githubEnvironment::Development"

elif [ "${{ github.event.inputs.environment }}" = "prod" ] || [ "${{ github.ref }}" = "refs/heads/master" ]; then

echo "::set-output name=environment::prod"

echo "::set-output name=githubEnvironment::Production"

fi

outputs:

environment: ${{ steps.setupEnv.outputs.environment }}

githubEnvironment: ${{ steps.setupEnv.outputs.githubEnvironment }}

name: jamcomments-${{ steps.setupEnv.outputs.environment }}-eus-fapp

build-and-deploy:

name: Build app and deploy to Azure

environment: ${{ needs.setupEnv.outputs.githubEnvironment }}

concurrency: ${{ needs.setupEnv.outputs.name }}

needs:

- setupEnv

env:

environment: ${{ needs.setupEnv.outputs.environment }}

runs-on: ubuntu-latest

steps:

- name: 'Checkout GitHub Action'

uses: actions/checkout@master

- name: Setup Node ${{ env.NODE_VERSION }} Environment

uses: actions/setup-node@v1

with:

node-version: ${{ env.NODE_VERSION }}

cache: 'yarn'

- name: 'Resolve Project Dependencies Using yarn'

shell: bash

run: |

yarn

yarn build

yarn test

- name: Azure Login

timeout-minutes: 5

uses: azure/login@v1

with:

creds: ${{ secrets.AZURE_CREDENTIALS }}

- name: Create infrastructure

run: infra/azure/apps/api/scripts/create-funcapp.sh

env:

DB_PASSWORD: ${{ secrets.DB_PASSWORD }}

- name: Install zip

uses: montudor/action-zip@v1

- name: 'Deploy to Azure Functions'

run: infra/azure/apps/api/scripts/deploy-funcapp.sh

- name: Azure Logout

if: ${{ always() }}

run: |

az logout

az cache purge

az account clearFinal thoughts

This guide is a product of many trials and errors I have made attempting to find a working solution for automatically deploying a function app to Azure Functions, with specific issues related to the Consumption Plan on Linux. It's by no means the best possible solution. It works but should be improved.

One thing that really bugs me is that the resources are not created in a transactional fashion. Even within a single ARM template, if an error happens while provisioning a nested resource, such as a database configuration item, whatever happened before that is kept in place.

This means that a resource can exist in an inconsistent state, potentially missing vital configuration or being exposed to exploits.

This is exacerbated by the fact we need to run multi-step procedural scripts to actually deploy the application code. The definition for code deployment would ideally look just like the ARM templates that lay out the desired state of a given resource - declaratively, not procedurally.

With the caveats admitted and even emphasized, I still enjoy this way of managing and deploying code and all its dependencies way more than I do logging into a Linux server with SSH and running the usual suite of tools - apt-get, vim configfile, etc.

Once you have figured out the pipeline and all its quirks, you save tons of time worrying about infrastructure, which you can invest more productively.

Hopefully, this has been useful to you, and if you have any questions or suggestions for improving the setup, be sure to leave a comment below!

P.S.

If you feel like we'd get along, you can follow me on Twitter, where I document my journey.

Published on